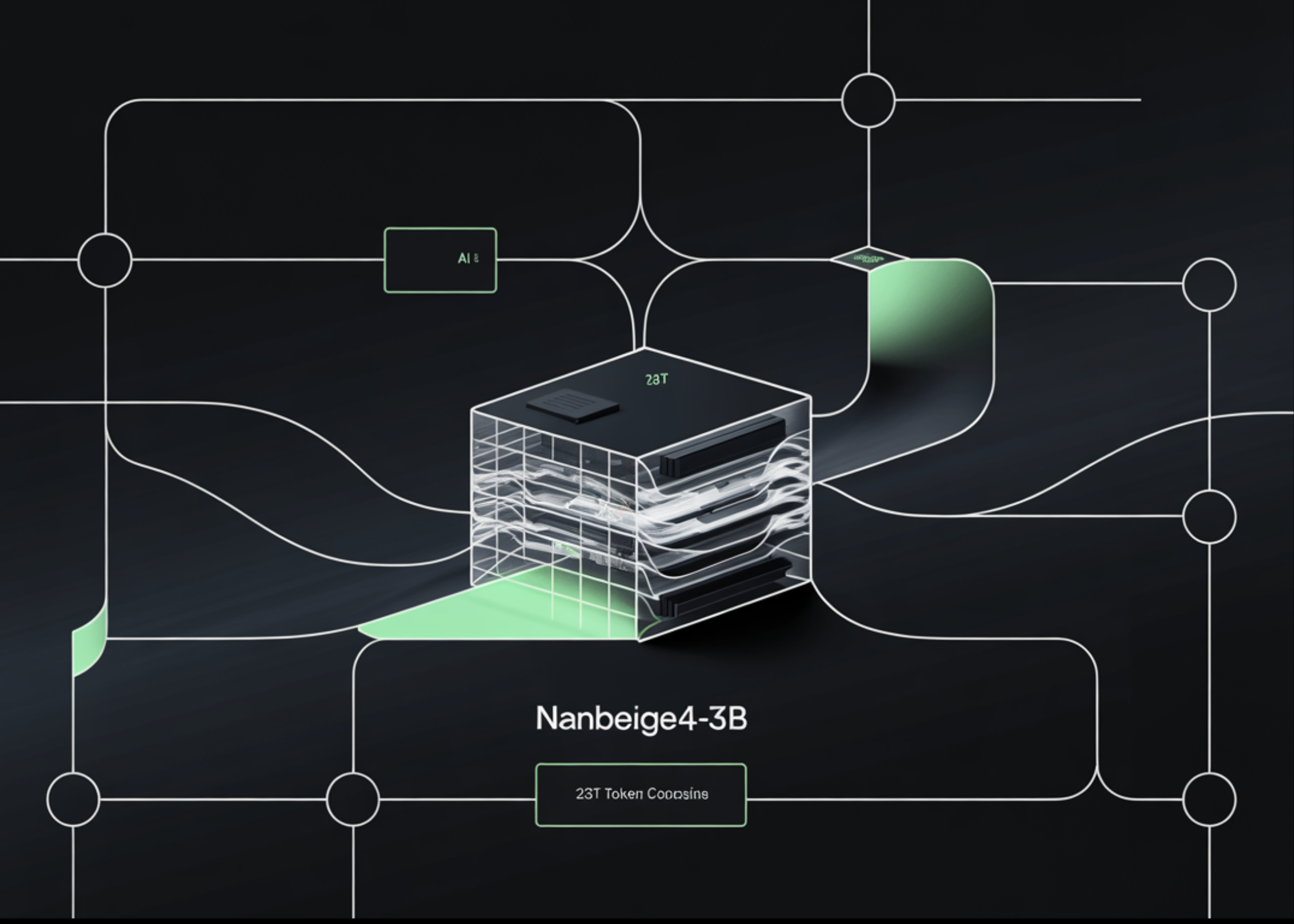

Boss Zhipin's Nanbeige Lab just showed that smarter training can beat brute-force scaling. Their 3B-parameter model matches 30B-class reasoning through intensive data curation and a 23T-token pipeline, suggesting that efficiency innovations might matter more than throwing more parameters at the problem. This could reshape how we think about deploying capable models in resource-constrained environments.
